The hype around AI could lead you to believe that organizations are well on their way to achieving their projected ROI. But the reality is more sobering: A 2025 S&P Global report found that among enterprises using gen AI, only 27% had achieved organization-wide adoption, while 33% of initiatives remained limited to the department or project level.

To close this gap, CEOs are increasingly tasking the COO, CHRO, CFO and CTO with collaboratively driving company-wide AI strategies. HR is uniquely positioned to step up as a powerful, strategic partner in this process. While conversations about AI often focus on job disruption and operational efficiency, HR’s role is more expansive: championing AI’s potential to transform business and workforce outcomes in positive, sustainable ways.

HR’s focus on enterprise adoption strategies is also affecting the function’s own internal AI adoption success rate. Research from Sapient Insights Group found that while the adoption of standalone AI and machine learning (ML) within HR processes surged by 90% last year and is expected to increase another 30% this year, formal AI adoption within HR processes is anticipated to remain under 50% across organizations heading into 2026.

HR’s careful pace is a sign not of reluctance but of strategic intentionality, driven by the need to:

- prioritize alignment between AI initiatives and enterprise ROI;

- adapt talent strategies to new AI business models;

- manage data privacy, regulatory and ethical risks; and

- address challenges in legacy HR data systems.

3 ways to scale HR’s AI impact

In this two-part series, we explore how HR can navigate these issues and play a central role in building responsible and impactful enterprise-level AI strategies. Here, we focus on the core capabilities HR must develop for cross-functional AI leadership:

Enterprise operating model AI adoption

Recognize and adapt to the speed and scale at which AI is being adopted in your business models, and anticipate the workforce implications accordingly. For instance, consider healthcare’s accelerated breakthroughs, technology firms using AI to boost productivity among their highest-performing teams, and call centers running into the limits of fully automating human interactions, as Klarna’s experience illustrates.

Strategic AI governance

Define clear, cross-functional policies for AI agent management, including decision-making authority, accountability and compliance, ensuring alignment among IT, finance and legal stakeholders.

Proactive risk management

Use scenario planning to address critical risks such as skill commoditization and the proliferation of “bring your own AI” tools, and maintain robust governance over complex AI systems that span multiple organizational platforms.

Building an agile operating model

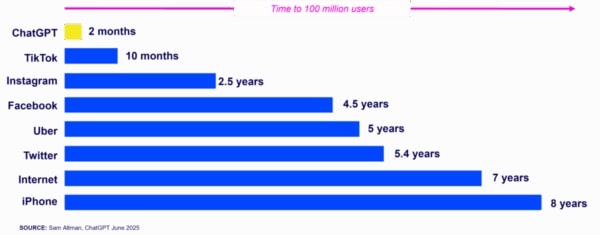

Individuals have adopted AI faster than any prior technology, using it in their everyday lives for tasks like planning vacations, creating content and solving problems.

However, this rapid personal adoption doesn’t always carry over into a cohesive workplace adoption strategy. Many employees struggle to connect the personal benefits of AI to their professional roles, or they fear negative effects on their jobs. West Monroe client case studies have revealed several common barriers to the full employee adoption needed to realize meaningful enterprise-wide value:

- Lack of use case understanding: Employees don’t fully understand the organization’s AI investments or how they are expected to use AI in their daily work.

- Unclear role expectations: Lack of clarity on what work an employee should own and what is better left for AI, at times leading to fear of displacement.

- Limited trust in results: Employees are skeptical because AI can make mistakes or produce broad categorizations of insights with limited context.

- Loss of human element: Perceived loss of human touch when interacting with AI for customer service, decision-making and other people-centered activities.

- Limited leader support: Leaders promote AI use but fail to provide training or to champion specific use cases and their value to the business.

- Concerns about data privacy: Employees don’t understand how AI tools use and store sensitive data, and lack knowledge of applicable regulations and possible risks to the company.

Organizations must bridge the gap between employees’ personal familiarity with AI tools and the professional benefits of using similar tools embedded in their work environments. The goal is to emphasize familiarity, relevance and ease of use. Implement high-impact use cases within employees’ own areas of expertise so they come to see AI as an ally rather than a challenge.


When AI is viewed as just another technology, rather than a transformative capability reshaping entire business models, organizations risk limiting its impact to isolated departments or narrow use cases. In doing so, they miss critical integration opportunities that drive enterprise-wide value. They also often overlook the time and investment needed to refresh their talent strategies to reflect how AI changes the way work gets done, including redefining future skill and capability requirements.

Most companies now have four options to choose from when identifying the best way to deliver on business priorities:

- employees

- contract labor

- outsourced labor

- automation/AI

Each talent type brings distinct strengths and trade-offs. Building an agile enterprise operating model requires HR to partner with the business to match the work to the most cost-effective and capable blend of resources, with the ability to adjust dynamically as business and regulatory needs evolve. This blended approach not only enhances performance but also supports scalability, innovation and long-term workforce sustainability.

The World Economic Forum’s Future of Jobs Report 2025 projects that structural labor-market shifts, with technological change such as AI among the major drivers, will create 170 million new jobs while displacing 92 million roles by 2030, a global net gain of 78 million jobs. Additionally, 86% of employers surveyed expect AI to transform their operations within the next five years.

Going forward, the organization’s talent strategy will need to shift toward enabling the workforce to adapt and deliver results in this transformed environment. That means equipping employees to thrive alongside AI through robust upskilling programs, along with frameworks that measure AI’s impact on productivity, employee satisfaction and business outcomes. AI affects roles in two key ways, by augmenting human capabilities and by automating routine tasks, which can lower both internal and outsourcing costs.

A framework for determining AI impact and role transformation

Strategic governance as a foundation

Effective governance lays the foundation for ethical, scalable AI use. Strategic approaches focus on creating frameworks, policies and processes that guide the responsible development and management of AI systems. A lack of clarity in these areas remains a major barrier to AI adoption. According to Sapient Insights Group, over 60% of organizations cite governance, data privacy and ethics as top barriers to unlocking AI’s potential.

A strong framework isn’t just a safeguard—it’s a strategic enabler of long-term success and business impact, driving:

- Better decision-making: Ensuring AI systems are consistently reliable, fair and unbiased

- Stronger brand reputation: Demonstrating a clear commitment to ethical and responsible AI use

- Faster innovation: Providing a structured process for developing, evaluating and prioritizing AI initiatives, which improves internal visibility and reduces AI hesitation and siloed efforts

- Increased stakeholder trust: Establishing transparency in AI practices to foster confidence among employees, customers and stakeholders

- Data leadership: Maintaining rigorous standards for data integrity, quality and security, to lay the foundation for trusted AI outcomes

To ensure responsible and effective AI use, organizations are establishing robust AI governance frameworks that incorporate:

- Ethical guidelines: Principles for fairness, transparency and responsible AI use

- Roles and responsibilities: Clear ownership for AI oversight and decision-making

- Structured processes: Standard evaluations for AI initiative approvals and management

- Data quality and integrity procedures: Data-level criteria and defined roles for maintaining clean, accurate, unbiased and reliable data throughout the AI lifecycle

- Risk and compliance monitoring: Protocols to continuously assess AI outcomes and potential risks. AI systems should be monitored with at least the same rigor as any human workforce involved in decision-making.

- Ongoing education: Training that equips stakeholders with the knowledge and tools to engage with AI responsibly and in alignment with your organization’s unique goals and values.

With this foundation in place, organizations can more confidently mitigate AI-related risks while preparing for the opportunities ahead.

Mitigating risks and looking forward

HR leaders must go beyond surface-level concerns about AI and anticipate deeper, systemic risks and the unintended consequences of AI investments today. Scenario planning and ongoing dialogue are critical for managing future disruptions effectively.

Emerging challenges we are already watching include:

- Commoditized talent: AI-driven skill strategies, powered by vast internal and external data, align with HR’s long-term vision: having the right people, in the right roles, at the right time. However, as AI becomes a standard tool across similar business models, we risk commoditizing talent—favoring uniformity over innovation. HR leaders must consider the long-term consequences, including the potential exclusion of individuals who offer unique perspectives, challenge norms or don’t align with AI-optimized profiles for continuous learning or high-level judgment. Are we unintentionally screening out the tactical thinkers or cautious testers simply because they don’t fit an AI-centric mold?

- Bring your own AI: HR leaders must also proactively address the emerging complexities introduced by “bring your own AI” (BYOAI) practices. Just as “bring your own device” policies rapidly became standard between 2013 and 2015, we anticipate similarly swift adoption of BYOAI. While setting clear guidelines and expectations will be essential for managing security, compliance and ethical risks, HR must also recognize and support employees’ natural inclination to innovate and personalize their work tools. Embracing this human-centric approach can unlock employee creativity and productivity, turning potential risks into strategic advantages.

- AI oversight turf wars: HR must clarify its role in the AI landscape by distinguishing between static responsibilities—like data management and system integration—and the active oversight needed for dynamic, AI-driven workforce systems. It’s essential to define which aspects, such as data accessibility and explainable outcomes, fall under HR or IT, and which evolving elements, like management of autonomous AI agents, require continuous governance. Clear boundaries between technical infrastructure and strategic oversight will enable the business to mitigate risks and harness AI’s potential more responsibly.

By proactively managing these risks, HR can shape a future where AI enhances, rather than disrupts, workforce dynamics.

What’s ahead for AI adoption in HR?

As HR leaders prepare to embrace AI, the key will be integrating it thoughtfully into internal processes and technology systems—once the benefits clearly outweigh the risks. In our next article, we’ll examine how HR is already using AI to streamline workflows, enhance employee experiences and drive meaningful operational efficiencies. We’ll also explore how to evaluate embedded AI within existing HR technology and translate individual AI successes into scalable, functional strategies that add value across the entire HR function—safely and sustainably.

Learn more from Stacey Harris at HR Tech during her megasession on Sept. 18, when she will reveal early findings from Sapient Insights’ 28th Annual HR Systems Survey.